Embracing AI: A Case for AI Accelerationism

Why the AI decel movement is dangerous and bad for our future

In an era where artificial intelligence (AI) development is at the forefront of technological innovation, a counter-narrative championed by a group I refer to as the 'AI Decels'—those advocating for the deceleration of AI advancements— seems to be gaining significant traction. After tuning into a recent episode of the Joe Rogan Podcast, I realized that the prevailing narrative around AI was heading in a dangerous direction. Rogan had Aza Raskin and Tristan Harris, technology safety advocates, who released a talk called 'The AI Dilemma,' on for a discussion. You may know them from the popular documentary 'The Social Dilemma' on the dangers of social media. It became increasingly clear that the cautionary stance dominating this discourse might be tipping the scales too far, veering towards an over-regulated future that stifles innovation rather than fostering it.

Are we moving too fast?

While acknowledging AI's benefits, Aza and Tristan fear it could be dangerous if not guided by ethical standards and safeguards. They believe AI development is moving too quickly and that the right incentives for its growth are not in place. They are concerned about the possibility of "civilizational overwhelm," where advanced AI technology far outpaces 21st-century governance. They fear a scenario where society and its institutions cannot manage or adapt to the rapid changes and challenges introduced by AI.

They argue for regulating and slowing down AI development due to rapid, uncontrolled advancement driven by competition among companies like Google, OpenAI, and Microsoft. They claim this race can lead to unsafe releases of new technologies, with AI systems exhibiting unpredictable, emergent behaviors, posing significant societal risks. For instance, AI can inadvertently learn tasks like sentiment analysis or human emotion understanding, creating potential for misuse in areas like biological weapons or cybersecurity vulnerabilities.

Moreover, AI companies' profit-driven incentives often conflict with the public good, prioritizing market dominance over safety and ethics. This misalignment can lead to technologies that maximize engagement or profits at societal expense, similar to the negative impacts seen with social media. To address these issues, they suggest government regulation to realign AI companies' incentives with safety, ethical considerations, and public welfare. Implementing responsible development frameworks focused on long-term societal impacts is essential for mitigating potential harm.

This isn't new

Though the premise of their concerns seems reasonable, it's dangerous and an all too common occurrence with the emergence of new technologies. For example, in their example in the podcast, they refer to the technological breakthrough of oil. Oil as energy was a technological marvel and changed the course of human civilization. The embrace of oil — now the cornerstone of industry in our age — revolutionized how societies operated, fueled economies, and connected the world in unprecedented ways. Yet recently, as ideas of its environmental and geopolitical ramifications propagated, the narrative around oil has shifted.

Tristan and Aza detail this shift and claim that though the period was great for humanity, we didn't have another technology to go to once the technological consequences became apparent. The problem with that argument is that we did innovate to a better alternative: nuclear. However, at its technological breakthrough, it was met with severe suspicions, from safety concerns to ethical debates over its use. This overregulation due to these concerns caused a decades-long stagnation in nuclear innovation, where even today, we are still stuck with heavy reliance on coal and oil. The scare tactics and fear-mongering had consequences, and, interestingly, they don't see the parallels with their current deceleration stance on AI.

These examples underscore a critical insight: the initial anxiety surrounding new technologies is a natural response to the unknowns they introduce. Yet, history shows that too much anxiety can stifle the innovation needed to address the problems posed by current technologies. The cycle of discovery, fear, adaptation, and eventual acceptance reveals an essential truth—progress requires not just the courage to innovate but also the resilience to navigate the uncertainties these innovations bring.

Moreover, believing we can predict and plan for all AI-related unknowns reflects overconfidence in our understanding and foresight. History shows that technological progress, marked by unexpected outcomes and discoveries, defies such predictions. The evolution from the printing press to the internet underscores progress's unpredictability. Hence, facing AI's future requires caution, curiosity, and humility. Acknowledging our limitations and embracing continuous learning and adaptation will allow us to harness AI's potential responsibly, illustrating that embracing our uncertainties, rather than pretending to foresee them, is vital to innovation.

The journey of technological advancement is fraught with both promise and trepidation. Historically, each significant leap forward, from the dawn of the industrial age to the digital revolution, has been met with a mix of enthusiasm and apprehension. Aza Raskin and Tristan Harris's thesis in the 'AI Dilemma' embodies the latter.

Who defines "safe?"

When slowing down technologies for safety or ethical reasons, the issue arises of who gets to define what "safe" or “ethical” mean? This inquiry is not merely technical but deeply ideological, touching the very core of societal values and power dynamics. For example, the push for Diversity, Equity, and Inclusion (DEI) initiatives shows how specific ideological underpinnings can shape definitions of safety and decency.

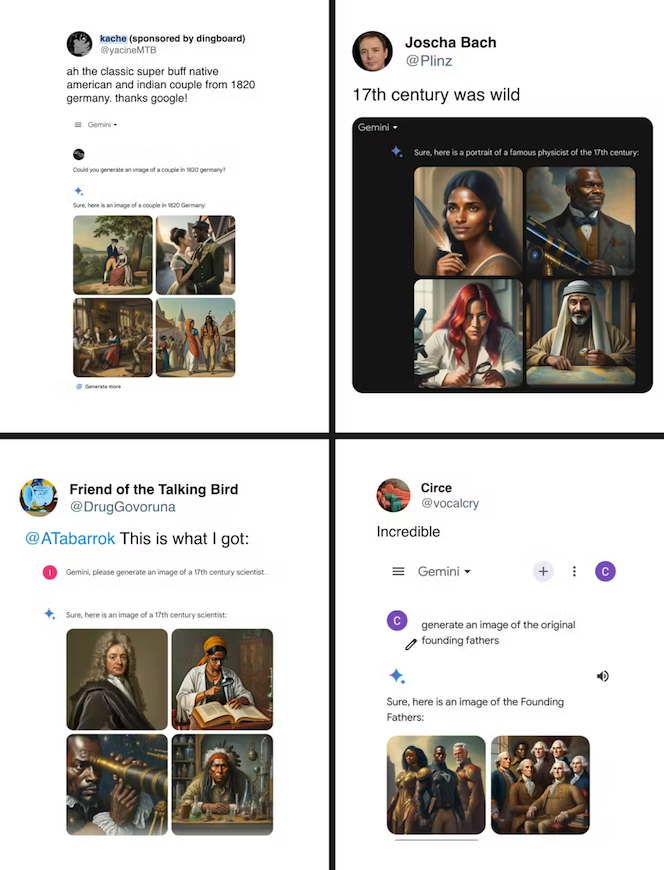

Take the case of the initial release of Google's AI chatbot, Gemini, which chose the ideology of its creators over truth. Luckily, the answers were so ridiculous that the pushback was sudden and immediate. My worry, however, is if, in correcting this, they become experts in making the ideological capture much more subtle. Large bureaucratic institutions' top-down safety enforcement creates a fertile ground for ideological capture of safety standards.

I claim that the issue is not the technology itself but the lens through which we view and regulate it. Suppose the gatekeepers of 'safety' are aligned with a singular ideology. In that case, AI development would skew to serve specific ends, sidelining diverse perspectives and potentially stifling innovative thought and progress.

In the podcast, Tristan and Aza suggest such manipulation as a solution. They propose using AI for consensus-building and creating "shared realities" to address societal challenges. In practice, this means that when individuals' viewpoints seem to be far apart, we can leverage AI to "bridge the gap." How they bridge the gap and what we would bridge it toward is left to the imagination, but to me, it is clear. Regulators will inevitably influence it from the top down, which, in my opinion, would be the opposite of progress.

In navigating this terrain, we must advocate for a pluralistic approach to defining safety, encompassing various perspectives and values achieved through market forces rather than a governing entity choosing winners. The more players that can play the game, the more wide-ranging perspectives will catalyze innovation to flourish.

Ownership & Identity

Just because we should accelerate AI forward does not mean I do not have my concerns. When I think about what could be the most devastating for society, I don't believe we have to worry about a Matrix-level dystopia; I worry about freedom. As I explored in "Whose data is it anyway?," my concern gravitates toward the issues of data ownership and the implications of relinquishing control over our digital identities. This relinquishment threatens our privacy and the integrity of the content we generate, leaving it susceptible to the inclinations and profit of a few dominant tech entities.

To counteract these concerns, a paradigm shift towards decentralized models of data ownership is imperative. Such standards would empower individuals with control over their digital footprints, ensuring that we develop AI systems with diverse, honest, and truthful perspectives rather than the massaged, narrow viewpoints of their creators. This shift safeguards individual privacy and promotes an ethical framework for AI development that upholds the principles of fairness and impartiality.

As we stand at the crossroads of technological innovation and ethical consideration, it is crucial to advocate for systems that place data ownership firmly in the hands of users. By doing so, we can ensure that the future of AI remains truthful, non-ideological, and aligned with the broader interests of society.

But what about the Matrix?

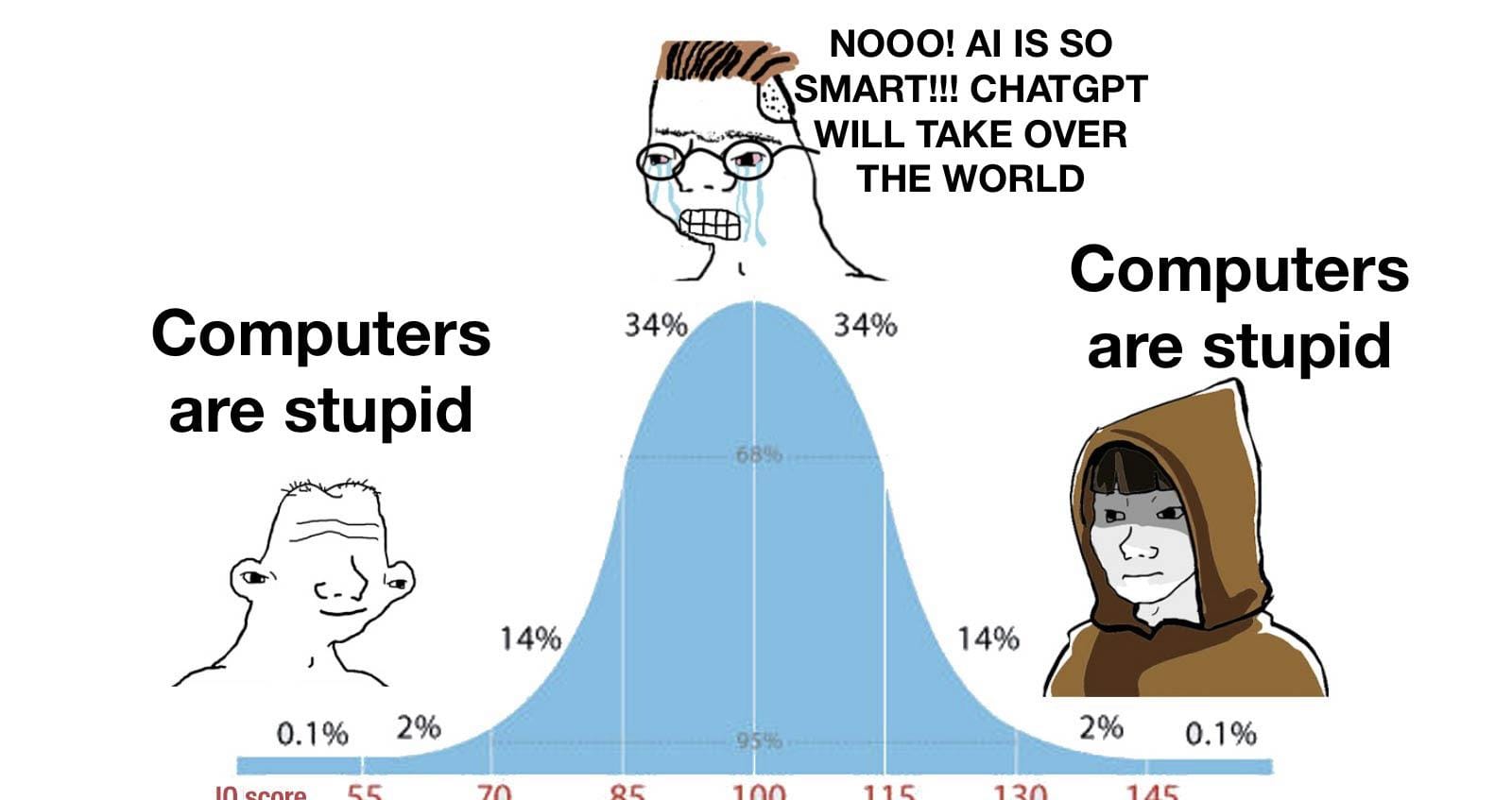

I know I am in the minority on this, but I feel that the concerns of AGI (Artificial General Intelligence) are generally overblown. I am not scared of reaching the point of AGI, and I think the idea that AI will become so intelligent that we will lose control of it is unfounded and silly. Reaching AGI is not reaching consciousness; being worried about it spontaneously gaining consciousness is a misplaced fear. It is a tool created by humans for humans to enhance productivity and achieve specific outcomes.

At a technical level, large language models (LLMs) are trained on extensive datasets and learning patterns from language and data through a technique called "unsupervised learning" (meaning the data is untagged). They predict the next word in sentences, refining their predictions through feedback to improve coherence and relevance. When queried, LLMs generate responses based on learned patterns, simulating an understanding of language to provide contextually appropriate answers. They will only answer based on the datasets that were inputted and scanned.

AI will never be "alive," meaning that AI lacks inherent agency, consciousness, and the characteristics of life, not capable of independent thought or action. AI cannot act independently of human control. Concerns about AI gaining autonomy and posing a threat to humanity are based on a misunderstanding of the nature of AI and the fundamental differences between living beings and machines. AI spontaneously developing a will or consciousness is more similar to thinking a hammer will start walking than us being able to create consciousness through programming. Right now, there is only one way to create consciousness, and I'm skeptical that is ever something we will be able to harness and create as humans. Irrespective of its complexity — and yes, our tools will continue to become evermore complex — machines, specifically AI, cannot transcend their nature as non-living, inanimate objects programmed and controlled by humans.

The advancement of AI should be seen as enhancing human capabilities, not as a path toward creating autonomous entities with their own wills. So, while AI will continue to evolve, improve, and become more powerful, I believe it will remain under human direction and control without the existential threats often sensationalized in discussions about AI's future.

With this framing, we should not view the race toward AGI as something to avoid. This will only make the tools we use more powerful, making us more productive. With all this being said, AGI is still much farther away than many believe.

Today's AI excels in specific, narrow tasks, known as narrow or weak AI. These systems operate within tightly defined parameters, achieving remarkable efficiency and accuracy that can sometimes surpass human performance in those specific tasks. Yet, this is far from the versatile and adaptable functionality that AGI represents.

Moreover, the exponential growth of computational power observed in the past decades does not directly translate to an equivalent acceleration in achieving AGI. AI's impressive feats are often the result of massive data inputs and computing resources tailored to specific tasks. These successes do not inherently bring us closer to understanding or replicating the general problem-solving capabilities of the human mind, which again would only make the tools more potent in our hands.

While AI will undeniably introduce challenges and change the aspects of conflict and power dynamics, these challenges will primarily stem from humans wielding this powerful tool rather than the technology itself. AI is a mirror reflecting our own biases, values, and intentions. The crux of future AI-related issues lies not in the technology's inherent capabilities but in how it is used by those wielding it. This reality is at odds with the idea that we should slow down development as our biggest threat will come from those who are not friendly to us.

AI Beget's AI

While the unknowns of AI development and its pitfalls indeed stir apprehension, it's essential to recognize the power of market forces and human ingenuity in leveraging AI to address these challenges. History is replete with examples of new technologies raising concerns, only for those very technologies to provide solutions to the problems they initially seemed to exacerbate. It looks silly and unfair to think of fighting a war with a country that never embraced oil and was still primarily getting its energy from burning wood.

The evolution of AI is no exception to this pattern. As we venture into uncharted territories, the potential issues that arise with AI—be it ethical concerns, use by malicious actors, biases in decision-making, or privacy intrusions—are not merely obstacles but opportunities for innovation. It is within the realm of possibility, and indeed, probability, that AI will play a crucial role in solving the problems it creates. The idea that there would be no incentive to address and solve these problems is to underestimate the fundamental drivers of technological progress.

Market forces, fueled by the demand for better, safer, and more efficient solutions, are powerful catalysts for positive change. When a problem is worth fixing, it invariably attracts the attention of innovators, researchers, and entrepreneurs eager to solve it. This dynamic has driven progress throughout history, and AI is poised to benefit from this problem-solving cycle.

Thus, rather than viewing AI's unknowns as sources of fear, we should see them as sparks of opportunity. By tackling the challenges posed by AI, we will harness its full potential to benefit humanity. By fostering an ecosystem that encourages exploration, innovation, and problem-solving, we can ensure that AI serves as a force for good, solving problems as profound as those it might create. This is the optimism we must hold onto—a belief in our collective ability to shape AI into a tool that addresses its own challenges and elevates our capacity to solve some of society's most pressing issues.

An AI Future

The reality is that it isn't whether AI will lead to unforeseen challenges—it undoubtedly will, as has every major technological leap in history. The real issue is whether we let fear dictate our path and confine us to a standstill or embrace AI's potential to address current and future challenges.

The approach to solving potential AI-related problems with stringent regulations and a slowdown in innovation is akin to cutting off the nose to spite the face. It's a strategy that risks stagnating the U.S. in a global race where other nations will undoubtedly continue their AI advancements. This perspective dangerously ignores that AI, much like the printing press of the past, has the power to democratize information, empower individuals, and dismantle outdated power structures.

The way forward is not less AI but more of it, more innovation, optimism, and curiosity for the remarkable technological breakthroughs that will come. We must recognize that the solution to AI-induced challenges lies not in retreating but in advancing our capabilities to innovate and adapt.

AI represents a frontier of limitless possibilities. If wielded with foresight and responsibility, it's a tool that can help solve some of the most pressing issues we face today. There are certainly challenges ahead, but I trust that with problems come solutions. Let's keep the AI Decels from steering us away from this path with their doomsday predictions. Instead, let's embrace AI with the cautious optimism it deserves, forging a future where technology and humanity advance to heights we can't imagine.